Until recently, it was really, really hard for systems to determine user intent from natural language or other cues. Hand-coded rules or simple keyword matching made early interpretation systems brittle. Even when systems understood the words you said, they might not know what to do with them if it wasn’t part of the script. Saying “switch on the lights” instead of “turn on the lights” could mean you stayed in the dark. Don’t know the precise incantation? Too bad.

Large language models have changed the game. Instead of relying on exact keywords or rigid syntax, LLMs just get it. They grasp underlying semantics, they get slang, they can infer from context. For all their various flaws, LLMs are exceptional manner machines that can understand intent and the shape of the expected response. They’re not great at facts (hi, hallucination), but they’re sensational at manner. This ability makes LLMs powerful and reliable at interpreting user meaning and delivering an appropriate interface.

Just like LLMs can speak in whatever tone, language, or format you specify, they can equally speak UI. Product designers can put this superpower to work in ways that create radically adaptive experiences, interfaces that change content, structure, style, or behavior—sometimes all at once—to provide the right experience for the moment.

It turns out that teaching an LLM to do this can be both easy and reliable. Here’s a primer.

Let’s build a call-and-response UI

Bespoke UI is one of the 14 experience patterns of Sentient Design, a framework for designing intelligent interfaces. A specific flavor of bespoke UI is “call-and-response UI,” where the system responds to an explicit action (a question, UI interaction, or event trigger) with an interface element specifically tailored to the request.

For example, ChatGPT embeds UI widgets into chat to display or explore content from external apps. Ask for a playlist, and you might get a Spotify widget. Or ask for nearby homes for sale, and you get a Zillow map.

ChatGPT does this for specific app integrations, but this approach works for any interface where you want to present a tailored UI in response to a user request. This Gemini prototype is one of my favorite examples of call-and-response UI, and here’s another: digital analytics platform Amplitude has an “Ask Amplitude” assistant that lets you ask plain-language questions to bypass the time-consuming filters and queries of traditional data visualization. A product manager can ask, “Compare conversion rates for the new checkout flow versus the old one,” and Amplitude responds with a chart to show the data. The system understands the context and then identifies the data to use, the right chart type to style, and the right filters to apply.

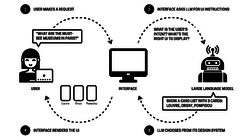

Call-and-response UI is fundamentally conversational: it’s a turn-based UI where you send a question, command, or signal, and the system responds with a UI widget tailored to the context. Rinse and repeat.

This is kind of like traditional chat, except that the language model doesn’t reply directly to the user—it replies to the UI engine, giving it instructions for how to present the response. The conversation happens in UI components, not in text bubbles.

To enable this, the LLM’s job shifts from direct chat to mediating simple design decisions. It acts less as a conversational partner than as a stand-in production designer assembling building-block UI elements or adjusting interface settings. It works like this: the system sends the user’s request to the LLM along with rules about how to interpret it and select an appropriate response. The LLM returns instructions to tell the interface what UI pattern to display. The user never sees the raw LLM response like they would in a text chat; instead the LLM returns structured data that the application can act on to display UI widgets to the user. The LLM talks to the interface, not the user.
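
Here is a minimal sketch of that round trip in Python. Everything named in it is hypothetical: `fake_llm` stands in for a real model call and returns a canned reply, and `RENDERERS` is an illustrative lookup table rather than part of any particular framework.

```python
import json

# Hypothetical stand-in for the rules sent along with every request.
SYSTEM_PROMPT = 'Reply only with JSON like {"UI": "<component>", "data": {}}.'


def fake_llm(system_prompt: str, user_message: str) -> str:
    """Stand-in for a real model call. Always answers with a Map instruction."""
    return json.dumps({"UI": "Map", "data": {"query": user_message}})


def render_map(data: dict) -> str:
    """Pretend renderer: a real app would draw a widget, not return a string."""
    return f"[Map widget: {data['query']}]"


# The UI engine's lookup from component name to renderer (illustrative).
RENDERERS = {"Map": render_map}


def handle_request(user_message: str, llm=fake_llm) -> str:
    raw_reply = llm(SYSTEM_PROMPT, user_message)  # the user never sees this
    instruction = json.loads(raw_reply)           # structured data, not prose
    renderer = RENDERERS[instruction["UI"]]       # the LLM picked the pattern
    return renderer(instruction["data"])          # the app displays the widget


print(handle_request("coffee near me"))  # prints "[Map widget: coffee near me]"
```

The sketch leaves out the interesting parts, namely the prompt and the choice among several components.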

That might sound a bit abstract, so let’s build one now. We’ll make a simple call-and-response system to provide city information. The nitty-gritty details are below, but here’s a video that shows how it all comes together:

A starter recipe

Here’s a simple system prompt that tells the LLM how to respond with an appropriate UI component:

The user will ask for questions and information about exploring a city. Your job is to:

- Determine the user’s intent

- Determine if we have enough information to provide a response.

- If yes, determine the available UI component that best matches the user’s intent, and generate a brief JSON description of the response.

- If not, ask for additional information

- Return a response in JSON with the following format (IMPORTANT: All responses MUST be in this JSON format with no additional text or formatting):

{
  "intent": "string: description of user intent",
  "UI": "string: name of UI component",
  "rationale": "string: why you chose this pattern",
  "data": { object with structured data }
}

The prompt tells the system to figure out what the user is looking for and, if it has the info to respond, pick a UI component to match. The system responds with a structured data object describing the UI and content to display; that gives a client-side UI engine what it needs to display the response as an interface component.
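
On the receiving end, the client can check the reply before acting on it. The sketch below is one way to do that, assuming the four keys from the format above. The `UIResponse` class and `parse_response` function are illustrative names, and the sample reply is invented for the example.

```python
import json
from dataclasses import dataclass

# The four keys the prompt asks for.
REQUIRED_KEYS = ("intent", "UI", "rationale", "data")


@dataclass
class UIResponse:
    intent: str
    ui: str
    rationale: str
    data: dict


def parse_response(raw: str) -> UIResponse:
    """Turn the model's raw reply into a typed object, or raise ValueError."""
    payload = json.loads(raw)  # JSONDecodeError is a subclass of ValueError
    if not isinstance(payload, dict):
        raise ValueError("expected a JSON object")
    missing = [key for key in REQUIRED_KEYS if key not in payload]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    if not isinstance(payload["data"], dict):
        raise ValueError("'data' must be an object")
    return UIResponse(
        intent=payload["intent"],
        ui=payload["UI"],
        rationale=payload["rationale"],
        data=payload["data"],
    )


# Invented sample reply, for illustration only.
reply = (
    '{"intent": "find street food", "UI": "Card Feed", '
    '"rationale": "user wants to browse", "data": {"category": "street food"}}'
)
parsed = parse_response(reply)
print(parsed.ui)  # prints "Card Feed"
```

A reply that is prose, or that omits a key, raises `ValueError`, so the application can retry or fall back instead of rendering something half-formed.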

The only thing missing is to tell the model about the available UI components and what they’re for—how to map each component to user intent.

## Available UI design patterns

Important!! You may use only these UI components based on the user intent and the content to display. You must choose from these patterns for the UI property of your response:

### Quick Filter

- A set of buttons that show category suggestions (e.g., “Nearby Restaurants,” “Historic Landmarks,” “Easy Walks”). Selecting a filter typically displays a card feed, list selector, or map.

- User intent: Looking for high-level suggestions for the kind of activities to explore.

### Map

- Interactive map displaying locations as pins. Selecting a pin opens a preview card with more details and actions.

- User intent: Seeking nearby points of interest and spatial exploration.

### Card Feed

- A list of visual cards to recommend destinations or events. Card types include restaurants, shops, events, and sights. Each card contains an image, short description, and action buttons (e.g., “Add” and “More info”).

- User intent: Discover and browse recommendations.
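
One way to keep this section of the prompt in sync with the client is to generate both from a single catalog. The sketch below assumes that approach. The `Component` class, `catalog_prompt`, and `is_allowed` are illustrative names, and the descriptions are shortened from the ones above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    description: str
    intent: str


# Shortened versions of the three components described in the prompt.
CATALOG = [
    Component(
        "Quick Filter",
        "A set of buttons that show category suggestions.",
        "Looking for high-level suggestions for the kind of activities to explore.",
    ),
    Component(
        "Map",
        "Interactive map displaying locations as pins.",
        "Seeking nearby points of interest and spatial exploration.",
    ),
    Component(
        "Card Feed",
        "A list of visual cards to recommend destinations or events.",
        "Discover and browse recommendations.",
    ),
]


def catalog_prompt(catalog: list) -> str:
    """Render the catalog as the markdown section appended to the prompt."""
    lines = ["## Available UI design patterns", ""]
    for component in catalog:
        lines += [
            f"### {component.name}",
            f"- {component.description}",
            f"- User intent: {component.intent}",
            "",
        ]
    return "\n".join(lines).rstrip()


# The same catalog doubles as the client's allow-list.
ALLOWED_UI = {component.name for component in CATALOG}


def is_allowed(ui_name: str) -> bool:
    return ui_name in ALLOWED_UI


print(catalog_prompt(CATALOG))
```

Adding a component then means adding one entry: the prompt and the allow-list both pick it up.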

I set this up in a custom GPT; try it out to see what it’s like to “talk” to a system like this. The chat transcript would never be shown as-is to users. Instead, it’s the machinery under the surface that translates the user’s asks into machine-readable interface instructions:

Designing with words

In Sentient Design, crafting the prompt becomes a central design activity, at least as important as drawing interfaces in your design tool. This is where you establish the rules and physics of your application’s tiny universe rather than specifying every interaction. Use the prompt to define the parameters and possibilities, then step back to let the system and user collaborate within that carefully crafted sandbox.

The simple prompt example above asks the system to interpret a request, identify the corresponding interface element to display, and turn it into structured data. It’s the essential foundation for creating call-and-response bespoke UI—and a great place to start prototyping that kind of experience. You’re simultaneously testing and teaching the system, building up a prompt to inform the interface how to respond in a radically adaptive way.

Use that same city tour prompt or your own similar instruction, and plug it into your preferred playground platform. Try a few different inputs against the system prompt to see what kind of results you get:

- I want to explore murals and underground galleries in Miami.

- Where should I go to eat street food?

- I only have one afternoon and love history.

As you test, look for patterns in how the system succeeds and fails. Does it consistently understand certain types of intent but struggle with others? Are there ambiguous requests where it makes reasonable but wrong assumptions? These insights shape both your prompt and your interface design—you might need sharper input constraints in the UI, or you might discover the system handles ambiguity better than expected. Keep refining and learning. (This look at our Sentient Scenes project describes the process and offers more examples.)
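
Once you move past a playground, a small harness makes those patterns easier to spot. The sketch below tallies which component the system picks for each sample input and collects replies that fail to parse. The `ask_model` function and its canned replies are placeholders so the code runs without a model; swap in a real call to try it for real.

```python
import json
from collections import Counter

SAMPLE_INPUTS = [
    "I want to explore murals and underground galleries in Miami.",
    "Where should I go to eat street food?",
    "I only have one afternoon and love history.",
]

# Invented canned replies so the sketch runs offline. The third one
# simulates a failure: prose instead of the required JSON.
CANNED_REPLIES = {
    SAMPLE_INPUTS[0]: '{"intent": "find art", "UI": "Map", "rationale": "spatial", "data": {}}',
    SAMPLE_INPUTS[1]: '{"intent": "find food", "UI": "Card Feed", "rationale": "browse", "data": {}}',
    SAMPLE_INPUTS[2]: "Sure! Here are some ideas for your afternoon.",
}


def ask_model(user_message: str) -> str:
    """Placeholder for a real model call."""
    return CANNED_REPLIES[user_message]


def run_trials(inputs, ask=ask_model):
    """Return (tally of chosen components, list of (input, raw reply) failures)."""
    tally = Counter()
    failures = []
    for text in inputs:
        raw = ask(text)
        try:
            tally[json.loads(raw)["UI"]] += 1
        except (ValueError, KeyError, TypeError):
            failures.append((text, raw))
    return tally, failures


tally, failures = run_trials(SAMPLE_INPUTS)
print(dict(tally))    # prints "{'Map': 1, 'Card Feed': 1}"
print(len(failures))  # prints "1"
```

The failures list is the part to read closely, since each entry pairs an input with exactly what the system said in response.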

The goal of this prompt exploration is to build intuition and validate how well the system can understand context and then do the right thing. As the designer, you’ll develop a sense of the input and framing the system needs, along with the kinds of responses you can expect in return. These inputs and outputs become the ingredients of your interface, shaping the experience you will create.

Experiment broadly across the range of activities your system might support. Here are some examples and simple constructions to get you started.

System Prompt Templates: A Pattern Library

| Capability | System Prompt |
|---|---|
| Intent classification | “Determine the user’s intent from the user’s [message or actions], and classify it into one of these intent categories: x, y, z.” |
| Action determination | “Based on the user’s request, choose the most appropriate action to take: x, y, z.” |
| Tool or data selection | “Choose the appropriate tool or data source to fulfill the user’s request: x, y, z.” |
| Manner and tone | “Identify the appropriate tone (x, y, z) to respond to the user’s message, and respond with the [message, color theme, UI element] most appropriate to that tone.” |
| Task list | “Determine the user’s goal, and make a plan to accomplish it in a sequenced list of 3–5 tasks.” |
| Ambiguity detection | “Evaluate if the user’s request is clear and actionable. If it is ambiguous or lacks details, respond with a clarifying question.” |
| Guardrails | “Evaluate the user’s request for prohibited, harmful, or out-of-scope content. If found, respond with a safe alternative or explanation.” |
| Persona alignment | “Respond in the voice, style, and knowledge domain of [persona]. Maintain this character across all replies, and do not break role.” |
| Pattern selection | “Based on the user’s [message or actions], select the best UI pattern to provide or request info as appropriate: x, y, z.” |
| Explainability | “After every answer, include a short explanation of why you chose this response and your confidence level on a 0–100 scale.” |
| Delegation | “If the task is outside your capabilities or should be handled by [human/tool], explain why and suggest or enable the next action.” |
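
The “x, y, z” slots in these templates are meant to be filled with your own options. A small helper can do that, as in the sketch below, which covers just two of the templates. The `TEMPLATES` keys and the `fill_template` and `compose` functions are illustrative names, not an established API.

```python
# Two templates from the table, with named slots in place of the brackets.
TEMPLATES = {
    "intent_classification": (
        "Determine the user's intent from the user's {source}, and classify it "
        "into one of these intent categories: {options}."
    ),
    "pattern_selection": (
        "Based on the user's {source}, select the best UI pattern to provide "
        "or request info as appropriate: {options}."
    ),
}


def fill_template(capability: str, source: str, options: list) -> str:
    """Fill one template's slots with a source and a list of allowed options."""
    if not options:
        raise ValueError("give the model at least one option to choose from")
    return TEMPLATES[capability].format(source=source, options=", ".join(options))


def compose(*sections: str) -> str:
    """Join several filled templates into a single system prompt."""
    return "\n\n".join(sections)


prompt = compose(
    fill_template("intent_classification", "message", ["explore", "eat", "plan"]),
    fill_template("pattern_selection", "message", ["Quick Filter", "Map", "Card Feed"]),
)
print(prompt)
```

The intent categories here (explore, eat, plan) are invented for the example; the pattern names come from the city prompt earlier.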

Prompt as design spec

Talking directly to the system is a new aspect of design and part of the critical role that designers play in establishing the behavior of intelligent interfaces. The system prompt is where designers describe how the system should make decisions and how it should interact with users. But this is not only a design activity. It’s also the place where product managers realize product requirements and where developers tell the system how to work with the underlying architecture.

The prompt serves triple duty as technical, creative, and product spec. This common problem space unlocks something special: Instead of working in a linear fashion, everyone can work together simultaneously, riffing off each other’s contributions to formally encode the what and why for how the system behaves.

Every discipline still maintains its own specific focus and domains, but far more work happens together. Teams dream up ideas, tailor requirements, design, develop, and test together, with each member contributing their unique perspective. Individuals can still do their thinking wherever they do their best work (sketching in Figma or writing pseudo-code in an editor), but the end result comes together in the prompt.

Constraints make it work

The examples here are deliberately simple, but the principles scale up. Whether you’re building an analytics dashboard that adapts to different questions or a design tool that responds to different creative needs, it’s all the same pattern: constrain the outputs, map them to intents, and let the LLM handle the translation.

It’s an approach that uses LLMs for what they do best (intent, manner, and syntax) and sidesteps where they’re wobbly (facts and complex reasoning). You let the system make real-time decisions about how to talk—the appropriate interface pattern for the moment—while outsourcing the content to trusted systems. The result fields open-ended requests with familiar and intuitive UI responses.
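
In code, that division of labor might look like the sketch below. It makes two assumptions of its own: an unrecognized component falls back to Quick Filter, and the model’s `data` carries only a `category` to look up. The `TRUSTED_PLACES` table is a placeholder for a real database or API, and its entries are invented.

```python
ALLOWED_UI = {"Quick Filter", "Map", "Card Feed"}
FALLBACK_UI = "Quick Filter"  # assumption: a safe default when the choice is off-list

# Placeholder for a trusted data source such as a database or API.
TRUSTED_PLACES = {
    "street food": ["Example Taco Cart", "Example Noodle Stand"],
    "history": ["Example Old Fort"],
}


def resolve(instruction: dict) -> dict:
    """Keep the model's choice of pattern, but source the content ourselves."""
    ui = instruction.get("UI")
    if ui not in ALLOWED_UI:
        ui = FALLBACK_UI  # constrain the output to known components

    data = instruction.get("data")
    category = data.get("category", "") if isinstance(data, dict) else ""

    # Anything else the model put in "data" (such as place names) is ignored.
    items = TRUSTED_PLACES.get(category, [])
    return {"UI": ui, "items": items}


result = resolve(
    {"UI": "Hologram", "data": {"category": "history", "items": ["Made-up Museum"]}}
)
print(result)  # prints "{'UI': 'Quick Filter', 'items': ['Example Old Fort']}"
```

The model decides how to present the answer; the lookup decides what the answer contains.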

The goal isn’t to generate wildly different interfaces at every turn but to deliver experiences that gently adapt to user needs. The wild and weird capabilities of generative models work best when they’re grounded in solid design principles and user needs.

As you’ve seen, these prompts don’t have to be rocket science—they’re plain-language instructions telling the system how and why to use certain interface conventions. That’s the kind of thinking and explanation that designers excel at.

In the end, it’s all fundamental design system stuff. Create UI solutions for common problems and scenarios, and then give the designer (a robot designer in this case) the info to know what to use when. Clean, context-based design systems are more important than ever, as is clear communication about what they do. That’s how you wire interface to intent.

So start writing; the interface is listening.
