When Interfaces Design Themselves

By Josh Clark and Veronika Kindred

Published Sep 30, 2025

Applications that manifest on demand. Interfaces that redesign themselves to fit the moment. Web forms that practically complete themselves. It’s tricky territory, but this strange frontier of intelligent interfaces is already here, promising remarkable new experiences for the designers who can negotiate the terrain. Let’s explore the landscape:

You look cautiously down a deserted street, peering both ways before setting off along a row of shattered storefronts. The street opens to a small square dominated by a bone-dry fountain at its center. What was once a gathering place for the community now serves as potential cover in an urban battlefield.

It’s the world of Counter-Strike, the combat-style video game. But there’s no combat in this world—only… world. It’s a universe created by AI researchers based on the original game. Unlike a traditional game engine with a designed map, this scene is generated frame by frame in response to your actions. What’s around the corner beyond the fountain? Nothing, not yet. That portion of the world won’t be created until you turn in that direction. This is a “world model” that creates its universe on the fly—an experience that is invented as you encounter it, based on your specific actions. World models like Mirage 2 and Google’s Genie 3 are the latest in this genre: name the world you want and explore it immediately.

What if any digital experience could be delivered this way? What if websites were invented as you explored them? Or data dashboards? Or taking it further, what if you got a blank canvas that you could turn into exactly the interface or application you needed or wanted in the moment? How do you make an experience like this feel intuitive, grounded, and meaningful, without spinning into a robot fever dream? It’s not only possible; it’s already happening.

These are radically adaptive experiences

Radically adaptive experiences change content, structure, style, or behavior—sometimes all at once—to provide the right experience for the moment. They’re a cornerstone of Sentient Design, a framework for creating intelligent interfaces that have awareness and agency.

Even conservative business applications can be radical in this way. Salesforce is a buttoned-up enterprise software platform heavy with data dashboards, but it’s made lighter through the selective use of radically adaptive interfaces. In the company’s generative canvas pilot, machine intelligence assembles certain dashboards on the fly, selecting and arranging precisely the metrics and controls to anticipate user needs. The underlying data hasn’t changed—it’s still pulled from the same sturdy sources—but the presentation is invented in the moment.

Instead of wading through static templates built through painstaking manual configuration, users get layouts uniquely tailored to the immediate need. Those screens can be built for explicit requests (“what’s the health of the Acme Inc account?”) or for implicit context. The system can notice an upcoming sales meeting on your calendar, for example, and compile a dashboard with that client’s pipeline, recent communications, and relevant market signals. Every new context yields a fresh, first-time-ever arrangement of data.

The system pulls information toward the user rather than demanding that they scramble through complex navigation to find what they need. We’ll explore a bunch more examples below, but first the big picture and its implications…

Conceived and Delivered in Real Time

This free-form interaction might seem novel, but it builds on our oldest and most familiar interaction: conversation. In dialogue, every observation and response can take the conversation in unpredictable directions. Genuine conversations can’t be designed in advance; they are ephemeral, created in the moment.

Radically adaptive experiences apply that same give-and-take awareness and agency to any interface or interaction. The experience bends and flows according to your wants and needs, whether explicitly stated (user command) or implicitly inferred (behavior and context).

Okay, but: if the design is created in real time, where does the designer fit in? Traditionally, it’s been the designer’s job to craft the ideal journey through the interface, the so-called happy path. You construct a well-lit road to success, paved with carefully chosen content and interactions completely under your control. That’s the way interface design has worked since the get-go.

Not anymore, or at least not entirely. With Sentient Design, the designer allows parts of the path to pave themselves, adapting to each traveler’s one-of-a-kind footsteps. The designer’s job shifts from crafting each interaction to system-level design: the rules and guardrails that help AI tailor and deliver these experiences. Which design patterns and interactions can the system use, and which are off-limits? How does it choose the right pattern to match the context? What manner should it adopt? The work is behavior design… not only for the user but for the system itself.

For designers, creating this system is like being a creative director. You give the system the brief, the constraints, and the design patterns to use. It takes tight, careful scoping, but done right, the result is an intelligent interface with enough awareness and agency to make designerly decisions on the spot.


This is not ye olde website. For designers, this can seem unsettling and out of control. The risk of radically adaptive interfaces is that they can become experiences without shape or direction. That’s where intentional design comes in: to conceive and apply thoughtful rules that keep the experience coherent and the user grounded.

This design work is weird and hairy and different from what came before. But don’t be intimidated; the first steps are familiar ones.

Let’s start gently: casual intelligence

Begin with what you already know. Adaptive, personalized content is nothing new; many examples are so familiar they feel downright ordinary. When you visit your favorite streaming service or e-commerce website, you get a mix of recommendations that nobody else receives. Ho hum, right? This is a radically adaptive experience, but it’s nothing special anymore; it’s just, you know, software. Let’s apply this familiar approach to new areas.

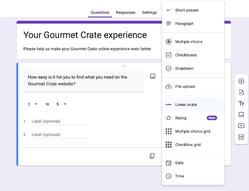

Adaptive content can drive subtle interaction changes, even in old-school web forms. In Google Forms, the survey-building tool, you choose from a dozen answer formats for each question you add: multiple choice, checklist, linear scale, and so on. It’s a necessary but heavy bit of friction. To ease the way, Google Forms sprinkles machine intelligence into that form field. As you type a question, the system suggests an answer type based on your phrasing. Start typing “How would you rate…,” and the default answer format updates to “Linear scale.” Under the hood, machine learning categorizes that half-written question based on the billions of other question-answer pairs that Google Forms has processed. The interface doesn’t decide for you, but it tees up a smart default as an informed suggestion.
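To make the “smart default” idea concrete, here is a minimal sketch in Python. Google Forms uses a machine-learning classifier for this; the keyword rules below are a hypothetical stand-in, and the `RULES` table and `suggest_answer_format` function are illustrative names, not Google’s API. The point it shows is the interaction contract: the function runs on partial text as you type, returns a suggested format, and falls back to a neutral default when nothing matches, leaving the final choice to the user.

```python
import re

# Hypothetical rule table standing in for Google's ML classifier.
# Each entry maps a phrasing pattern to a suggested Google Forms answer format.
RULES = [
    (re.compile(r"\bhow would you rate\b|\bon a scale\b", re.I), "Linear scale"),
    (re.compile(r"\bselect all\b|\bcheck all\b", re.I), "Checkboxes"),
    (re.compile(r"\bwhen\b|\bwhat date\b", re.I), "Date"),
    (re.compile(r"^\s*(do|does|did|are|is|have|will)\b", re.I), "Multiple choice"),
]

# Neutral default used when no rule matches.
DEFAULT_FORMAT = "Short answer"


def suggest_answer_format(question: str) -> str:
    """Suggest a default answer format for a (possibly half-typed) question.

    The result is only a suggestion; the UI should let the user override it.
    """
    for pattern, answer_format in RULES:
        if pattern.search(question):
            return answer_format
    return DEFAULT_FORMAT


if __name__ == "__main__":
    for q in ["How would you rate", "Select all that apply", "What is your name?"]:
        print(q, "->", suggest_answer_format(q))
```

Because the function works on partial input, it can be called on every keystroke; a real system would swap the rule table for a trained model while keeping the same “suggest, don’t decide” behavior.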

This is casual intelligence. Drizzle some machine smarts onto everyday content and interface elements. Even small interventions like these add up to a quietly intelligent interface that is aware and adaptive. Easing the frictions of routine interactions is a great place to get started. As operating systems begin to make on-device models available to applications, casual intelligence will quickly become a “why wouldn’t we?” thing. A new generation of iPhone apps is already using on-device models in iOS for free, low-risk, private actions like tagging content, proposing titles, or summarizing text.

But don’t feel limited to the mundane. Casual intelligence can ease even complex and high-stakes domains like health care. You know that clipboard of lengthy, redundant forms you fill out at the doctor’s office to describe your health and symptoms? The Ada Health app uses machine intelligence to slim that hefty one-size-fits-all questionnaire and turn it into a focused conversation about relevant symptoms. The app adapts its questions as you describe what you’re experiencing. It uses your description, history, and its own general medical knowledge to steer toward relevant topics, leaving off-topic stuff alone. The result is at once thorough and efficient, saving the patient time and outfitting the medical staff with the right details for a meaningful visit.
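The core move here, asking only the questions that the patient’s own description makes relevant, can be sketched as a topic filter over a question bank. This is a toy illustration in Python, not Ada’s method and not medical logic: the question bank, the `SYMPTOM_TOPICS` keyword map, and `next_questions` are all hypothetical, and a real system would infer relevance with a model rather than substring matching.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Question:
    text: str
    topics: frozenset[str]  # hypothetical topic tags for this question


# Hypothetical question bank: the "clipboard" of every possible question.
QUESTION_BANK = [
    Question("Do you have a fever?", frozenset({"respiratory", "infection"})),
    Question("Are you coughing up phlegm?", frozenset({"respiratory"})),
    Question("Does the pain get worse after eating?", frozenset({"digestive"})),
    Question("Have you had any recent injuries?", frozenset({"musculoskeletal"})),
]

# Hypothetical keyword-to-topic map standing in for a model's understanding.
SYMPTOM_TOPICS = {
    "cough": {"respiratory"},
    "sore throat": {"respiratory", "infection"},
    "stomach ache": {"digestive"},
    "back pain": {"musculoskeletal"},
}


def relevant_topics(description: str) -> set[str]:
    """Collect the topics mentioned in the patient's free-text description."""
    text = description.lower()
    topics: set[str] = set()
    for keyword, tags in SYMPTOM_TOPICS.items():
        if keyword in text:
            topics |= tags
    return topics


def next_questions(description: str, asked: set[str]) -> list[str]:
    """Return unasked questions that share at least one topic with the description."""
    topics = relevant_topics(description)
    return [
        q.text
        for q in QUESTION_BANK
        if q.topics & topics and q.text not in asked
    ]
```

Re-running `next_questions` each time the description grows is what makes the questionnaire adapt: new symptoms pull in new questions, and off-topic ones never appear.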

Start with what you know. As you design the familiar interactions of your everyday practice, ask yourself how this experience could be made better with a pinch of awareness and a dash of casual intelligence. Think of features that suggest, organize, or gently nudge users forward. Small improvements add up. Boring is good.

Bespoke UI and on-demand layouts

If machine intelligence can guide an individualized path through a thicket of medical questions to shape a survey, it’s not a big leap to let it craft an interface layout and flow. Both approaches frame the “conversation” of the interaction, tailoring the experience to the user’s immediate needs and context. The hard part here is striking a balance between adaptability and cohesiveness. How do you let the experience move freely in appropriate directions while keeping the thing on the rails? For radically adaptive experiences to be successful, they have to bend to the immediate ask while staying within user expectations, system capabilities, and brand conventions.

Let’s revisit the Salesforce example. The interface elements are chosen on the fly but only from a curated collection of UI patterns from Salesforce’s design system. This includes familiar elements like tables, charts, trend indicators, and other data visualization tools. While the layout itself may be radically adaptive, the individual components are templated for visual and functional consistency—just like a design system provides consistent tools for human designers, too.

This is a conservative and reliable approach to the bespoke UI experience pattern, one of 14 Sentient Design experience patterns. Bespoke UIs compose their own layout in direct response to immediate context. This approach relies on a stable set of interface elements that can be remixed to meet the moment. In the Salesforce version of bespoke UI, adaptability lives within the specific constraints of the dashboard experience, creating a balance between real-time flexibility and a grounded user experience.
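One way to picture “adaptability within constraints” is a validation step between the model and the renderer: the model proposes a layout, and only components from the design system, with the props they need, get through. Here is a minimal sketch in Python, assuming the model returns a list of component dictionaries; the component names, required props, and `validate_layout` function are hypothetical, not Salesforce’s actual design system or API.

```python
from typing import Any

# Hypothetical design-system allowlist: component type -> props it requires.
DESIGN_SYSTEM: dict[str, set[str]] = {
    "metric_card": {"label", "value"},
    "trend_indicator": {"label", "series"},
    "table": {"columns", "rows"},
    "bar_chart": {"title", "series"},
}


def validate_layout(proposed: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Keep only known components that carry all of their required props.

    Components the model invented, or ones missing required props, are
    dropped rather than rendered. If nothing survives, return a fixed,
    known-good fallback layout so the user never sees a blank screen.
    """
    accepted = []
    for component in proposed:
        required = DESIGN_SYSTEM.get(component.get("type"))
        if required is None:
            continue  # not part of the design system
        if not required.issubset(component):
            continue  # missing one or more required props
        accepted.append(component)
    if accepted:
        return accepted
    return [{"type": "metric_card", "label": "No data", "value": "n/a"}]
```

The layout (which components, in what order) stays free to adapt, while every individual piece is guaranteed to be something the design system already knows how to render consistently.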

When you ease the constraints, the bespoke UI pattern leads to more open-ended scenarios. Google’s Gemini team developed a prototype that deploys a bespoke UI inside a chat context—but it doesn’t stay chat for long. As in any chat experience, you can ask anything; the demo starts by asking for help planning a child’s birthday party. Instead of a text reply, the system responds with an interactive UI module—a purpose-built interface to explore party themes. Familiar UI components like cards, forms, and sliders materialize to help you understand, browse, or select the content.

Although the UI elements are familiar, the path is not fixed. Highlighting a word or phrase in the Google prototype triggers a contextual menu with relevant actions, allowing you to pivot the conversation based on any random word or element. This blends the flexibility of conversation with the grounding of familiar visual UI elements, creating an experience that is endlessly flexible yet intuitive.

Successful bespoke UI experiences rely on familiarity and a tightly constrained set of UI and interaction patterns. The Gemini example succeeds because it has a very small number of UI widgets in its design system, and the system was taught to match specific patterns to specific user intent. Experiences like this are open-ended in what they accept for input, but they’re constrained in the language they produce. Our Sentient Scenes project is a fun example. Provide a scene or theme, and Sentient Scenes “performs” the scene for you: a playful little square acts it out, and the scenery adapts, too. Color, typography, mood, and behavior align to match the provided scene. The input is entirely open-ended and the outcomes are infinite, but the format constraints ensure that the experience always feels familiar despite the divergent possibilities.

The same principles and opportunities apply beyond graphical UI, too. What happens, for example, when you apply bespoke UI to a podcast, an experience that is typically fixed and scripted? Walkcast is an individualized podcast that tells one-of-a-kind stories seeded by your physical location. While other apps have attempted something similar by finding and reading Wikipedia entries, Walkcast goes further and in new directions. The app weaves those location-based facts into weird and discursive stories that leap from local trivia to musings about nature, life goals, local personalities, and sometimes a few tall tales. There’s a fun fractured logic connecting those themes—it feels like going on a walk with an eccentric friend with a gift for gab—and the more you walk, the more the story expands into adjacent topics. It’s an example of embracing some of the weird unpredictability of machine intelligence as an asset instead of a liability. And it’s an experience that is unique to you, radically adaptive to your physical location.

Each of these systems reimagines the relationship between user and interface, but all stay grounded within rules that the system must observe for presentation and interaction. Things get wilder, though, when you let the user define (and bend) those rules to create the universe they want. And so of course we should talk about cartoons.

The Intelligent Canvas

Generations of kids watched Wile E. Coyote paint a tunnel entrance on a solid cliff face, only to see Road Runner zip right through, the painted illusion somehow suddenly real. Classic cartoons are full of these visual meta gags: characters drawing or painting something into existence in the world around them, the cartoonist’s self-conscious nod to the medium as a magic canvas where anything can happen.

A dash of machine intelligence brings the same transformative potential to digital interfaces, only this time putting the “make it real” paintbrush in your hands. What if you could draw an interface and start using it? Or manifest the exact application you need just by describing it? That’s Sentient Design’s intelligent canvas experience pattern, where radical adaptability dissolves the boundaries between maker and user.

The Math Notes feature on iPad, for example, effectively reimagines the humble calculator as a dynamic surface for creating your own one-off calculator apps. Scrawl variables and equations on the screen, drop in some charts, and they update automatically as you make changes. You define both the interface and the logic just by writing and drawing. One screen might scale recipe portions for your next dinner party, while another works out equations for your physics class. By using computer vision and machine learning to parse your handwriting and equations, the experience lets you doodle on-demand interfaces with entirely new kinds of layouts.

Functionally, it’s just simple spreadsheet math, but the experience liberates those math smarts from the grid. Designer, ask yourself: what platform or engine is your application built on? What are the opportunities for users to instantly create their own interfaces on top of that engine for purpose-built applications?
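The “engine” under a Math Notes page is, at heart, a small reactive calculator: named inputs, formulas that depend on them, and results that recompute when an input changes. Here is a minimal sketch in Python of that engine using the recipe-scaling example. It assumes the handwriting has already been parsed into names and formulas (the hard machine-learning part, not shown), and the `Sheet` class and its methods are hypothetical, not Apple’s implementation.

```python
from typing import Callable

# A formula reads the current values and returns a number.
Formula = Callable[[dict[str, float]], float]


class Sheet:
    """Minimal reactive sheet: formulas recompute from current inputs on every read."""

    def __init__(self) -> None:
        self.inputs: dict[str, float] = {}
        self.formulas: dict[str, Formula] = {}

    def set_input(self, name: str, value: float) -> None:
        self.inputs[name] = value

    def define(self, name: str, formula: Formula) -> None:
        self.formulas[name] = formula

    def values(self) -> dict[str, float]:
        env = dict(self.inputs)
        # Evaluate formulas in definition order; a formula may use earlier results.
        for name, formula in self.formulas.items():
            env[name] = formula(env)
        return env


# Recipe scaling: a recipe for 2 servings, cooked for 4 guests.
sheet = Sheet()
sheet.set_input("servings", 2)
sheet.set_input("guests", 4)
sheet.set_input("flour_g", 250)
sheet.define("scale", lambda v: v["guests"] / v["servings"])
sheet.define("flour_needed", lambda v: v["flour_g"] * v["scale"])
print(sheet.values()["flour_needed"])

sheet.set_input("guests", 6)  # change one "scrawled" number...
print(sheet.values()["flour_needed"])  # ...and the dependent result follows
```

Recomputing everything on each read is the simplest correct choice for a page of a few formulas; a larger engine would track dependencies and recompute only what changed.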

As flexible as it is, Math Notes is limited to creating interactive variables, equations, and charts—the stuff of a calculator app. What if you could draw anything and make it interactive? tldraw is a framework for creating digital whiteboard apps. Its team created a “Make Real” feature to transform hand-drawn sketches or wireframes into interactive elements built with real, live functional code. Sketch a rough interface—maybe a few buttons, some input fields, a slider—and click the “Make Real” button to transform your drawing into working, interactive elements. Or get more ambitious: draw a piano keyboard to create a playable instrument, or sketch a book to make a page-flipping prototype. The whiteboard evolves from a space for visualizing ideas to a workshop for creating purpose-built interactions.

When you fold in more sophisticated logic, you start building full-blown applications. Chat assistants like Claude, Gemini, and ChatGPT let you spin up entire working web applications. If you’re trying to figure out how color theory works, ask Claude to make a web app to visualize and explore the concepts. In moments, Claude materializes a working web app for you to use right away. Looking for something a little more playful? Ask Claude to spin up a version of the arcade classic Asteroids, and suddenly you’re piloting a spaceship through a field of space rocks. To make it more interesting, ask Claude to “add some zombies,” and green asteroids start chasing your ship. Keep going: “Make the lasers bounce off the walls.” You get the idea; the app evolves through conversation, creating a just-for-you interactive experience.

Instead of downloading apps designed for general tasks, in other words, the intelligent canvas experience lets you sketch out exactly what you need. Taken all the way to its logical conclusion, every canvas (or file or session) could become its own ephemeral application: disposable software manifested when you need it and discarded when you don’t. Like that Counter-Strike simulation world, these intelligent canvas experiences are universes that don’t exist until someone explores or describes them.

That’s the vision of “Imagine with Claude,” a research preview from Anthropic. Give the prototype a prompt to spin up an application idea, and Imagine with Claude generates the application as you click through it—laying the track in front of the proverbial locomotive. “It generates new software on the fly. When we click something here, it isn’t running pre-written code, it’s producing the new parts of the interface right there and then,” Anthropic says in its demo. “Claude is working out from the overall context what you want to see.… This is software that generates itself in response to what you need rather than following a predetermined script. In the future, will we still have to rely on pre-made software, or will we be able to create whatever software we want as soon as we need it?”

From searching to manifesting

Over the last few decades, the method to discover content and applications has evolved from search to social curation to algorithmic recommendation. Now this new era of interaction design allows you to manifest. Describe the thing that you want, and the answer or artifact appears. In fact, thanks to the awareness and anticipation of these systems, you may not even have to ask at all.

That’s a lot! We’ve traveled a long way from gently helpful web forms to imagined-on-the-spot applications. That’s all the stuff of Sentient Design. Radically adaptive experiences take many forms, from quietly helpful to wildly imaginative, the bookend extremes of a sprawling range of possibilities.

While the bespoke UI and intelligent canvas patterns represent the wildest frontier, radical adaptability also powers more focused experiences. Sentient Design offers a dozen more patterns from alchemist agents to non-player characters to sculptor tools, and more.

What binds all of these experience patterns together is a shift away from static, one-size-fits-all interfaces toward radically adaptive experiences. For designers, the new opportunity is to explore what happens when you can add awareness, adaptability, and agency to any interaction. Sometimes the answer is to add casual intelligence to existing experiences. Other times it means reimagining the entire interaction model. Either way, the common goal is to deliver value by providing the right experience at the right moment.

But real talk: this is hard. It doesn’t “just work.” Real-world product development is challenging enough, and the quirky, unpredictable nature of AI has to be managed carefully. This is delicate design material, so it’s worth asking a practical question:

Can self-driving interfaces be trusted?

An interface that constantly changes risks eroding the very consistency and predictability that helps users build mastery and confidence. Unchecked, a radically adaptive experience can quickly become a chaotic and untrustworthy one.

If interfaces are redesigning themselves on the fly, how do users keep their bearings? What latitude will you give AI to make design decisions, and with what limitations? When every experience is unique, how do you ensure quality, coherence, and user trust? And how do the system and its users recover when things go sideways?

This stuff takes more than sparkles and magical thinking; it takes careful and intentional effort. You have to craft the constraints as carefully as the capabilities; you design for failure as much as for success. (Just ask Wile E. Coyote.)

Radically adaptive experiences are new, but answers are emerging. We explore those answers in depth in our upcoming book, Sentient Design, with a wealth of principles and patterns, including two chapters on defensive design. All of it builds on familiar foundations. The principles of good user experience (clarity, consistency, sharp mental models, user focus, and user control) remain as important as ever.

What astonishing new value can you create?

Let’s measure AI not by the efficiencies it wrings out but by the quality and value of the experiences it enables.

What becomes possible for your project when you weave intelligence into the interface itself? What astonishing new value can you create by collapsing the effort between intent and action? That’s the opportunity that unlocks what’s next.

Need help navigating the possibilities? Big Medium provides product strategy to help companies figure out what to make and why, and we offer design engagements to realize the vision. We also offer Sentient Design workshops, talks, and executive sessions. Get in touch.