What does it mean to weave intelligence into an interface? Instead of treating AI as a tool, what if it were used as a design material? Those questions inspired us to make Sentient Scenes, a playful exploration of Sentient Design and radically adaptive experiences.

Sentient Scenes features an adventurous little square as hero and protagonist. You describe a scene, anything from “underwater adventure” to “Miami Vice” to “computer glitch,” and the interface transforms in response. The square character “acts” the scene, and the scenery adapts, too: the colors, typography, mood, and behavior of the interface all shift to match the scenario.

Request a ballet scene, and the square twirls gracefully. Ask for a haunted house, and it jitters nervously. Each prompt creates a unique, ephemeral scene just for that moment. The interface adapts to your one-off request and then moves on to the next.

Why We Made It

We built this little side project as a way to explore and teach these Sentient Design themes and opportunities:

Intelligent interfaces beyond chat. Instead of creating ever more chatbots, we seek to embed intelligence within the interface itself. For this project, our goal was to create a canvas whose style, mood, and manner adapt based on user intent. Instead of an experience for talking about a scene, the experience becomes the scene you describe. (This simple version of the bespoke UI pattern is one of many kinds of Sentient Design’s radically adaptive interfaces.)

Personality without anthropomorphism. We’re interested in establishing presence without aping human behavior. Here, we deployed simple animation to suggest personality without pretending to be human.

Awareness of context and intent. More than just following commands, we want to create systems that infer what you mean. Here, the system understands your prompt’s emotional and thematic qualities, not just its literal meaning. When you request “Scooby Doo in a haunted house,” it recognizes the genre (cartoon mystery), mood (spooky but playful), and motion patterns (erratic, fearful) appropriate to that scenario.

Easy guidance for exploration. We want intelligent interfaces to be simple to pick up. For this project, we used the best-practice “nudge” UI design pattern—quick-click prompts to help new users get started with useful examples.

Open-ended experiences within constraints. We want to marry the open-endedness of chat within grounded experiences defined by simple technical boundaries. For this project, the result is a generator of simple scenes that are at once similar but full of divergent possibility.

Sentient Scenes may be playful, but its possibilities are serious. These same principles power self-assembling dashboards like Salesforce’s generative canvas. They support on-demand applications like iPad’s Math Notes, Claude’s Artifacts, or tldraw’s Make Real feature. They underlie bespoke UI like this Google Gemini demo. Like Sentient Scenes, these radically adaptive experiences demonstrate the emerging future of hyper-individualized interaction design.

How It Works

Sentient Scenes is kind of like chatting with AI, except that the system doesn’t reply directly to your prompt. Instead, it replies to the UI with instructions for how the interface should behave and change.

The UI results are impactful, but it’s simple stuff under the hood. The project is made of HTML, CSS, and vanilla JavaScript. The UI communicates with OpenAI via a thin PHP web app, which responds to user prompts with a JSON object that gives the client-side application the info it needs to update its UI. Those instructions are straightforward: update CSS values for background and foreground color; update the text caption; apply a new font; and add a CSS animation and glow to the square.

The instructions for choosing and formatting the values are handled in a prompt from the simple PHP web app. The prompt starts like so:

You are a scene generation assistant that creates animated stories. Your output defines a scene by controlling a character (a square div) that moves based on the user’s description. The character always starts at the center of the viewport. You will provide the colors, font, animation, and text description to match the story’s topic and mood.

## Response guidelines

Generate a single JSON object (no other text) with these exact properties to define the scene’s **mood, movement, and atmosphere**. Return only a valid JSON object, formatted exactly as shown. Do not include explanations, extra text, or markdown headers.

{
  "background": "string (6-digit hex color for the scene background)",
  "content": "string (6-digit hex color for character and text, must meet WCAG 2.1 AA contrast with background)",
  "shadow": "string (valid CSS box-shadow value for atmosphere)",
  "caption": "string (must start with a relevant emoji and end with '...')",
  "font-family": "string (only standard web-safe fonts or generic families)",
  "keyframes": "string (CSS @keyframes block using percentage-based transforms)",
  "animation": "string (CSS animation property using 'infinite')",
  "fallback": "string (one of: bounce, float, jitter, pulse, drift)"
}
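The schema asks the model to pick a "content" color that meets WCAG 2.1 AA contrast against the background, but a language model can’t be counted on to do that arithmetic reliably. As a minimal sketch (not taken from the project’s code; the function names here are hypothetical), a client could verify the pair itself using the WCAG 2.1 relative-luminance formula and the 4.5:1 threshold that AA sets for normal-size text:

```javascript
// Convert one 8-bit sRGB channel to its linear-light value (WCAG 2.1 formula).
function linearize(channel) {
  const c = channel / 255;
  return c <= 0.03928 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
}

// Relative luminance of a 6-digit hex color such as "#0A3D62".
function relativeLuminance(hex) {
  const match = /^#([0-9a-f]{6})$/i.exec(hex);
  if (!match) throw new Error(`Expected a 6-digit hex color, got "${hex}"`);
  const value = parseInt(match[1], 16);
  const r = (value >> 16) & 0xff;
  const g = (value >> 8) & 0xff;
  const b = value & 0xff;
  return 0.2126 * linearize(r) + 0.7152 * linearize(g) + 0.0722 * linearize(b);
}

// Contrast ratio between two colors, from 1 (identical) to 21 (black on white).
function contrastRatio(hexA, hexB) {
  const a = relativeLuminance(hexA);
  const b = relativeLuminance(hexB);
  return (Math.max(a, b) + 0.05) / (Math.min(a, b) + 0.05);
}

// Hypothetical helper: true when the pair clears the AA bar for normal text.
function meetsAA(background, content) {
  return contrastRatio(background, content) >= 4.5;
}
```

If a check like this fails, the app could swap in plain white or black for the "content" color, whichever contrasts better, instead of rendering an unreadable caption.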

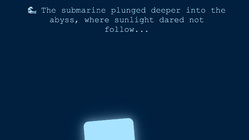

For example, if you enter “submarine descends,” the system might respond like so:

{
  "background": "#0A3D62",
  "content": "#E9F3FB",
  "shadow": "0 0 1.5vw rgba(233, 243, 251, 0.4), 0 0 3vw rgba(233, 243, 251, 0.2)",
  "caption": "🌊 The tiny submersible creaked under pressure as it sank deeper into the silent, unexplored abyss...",
  "font-family": "Courier New, monospace",
  "keyframes": "@keyframes descend { 0% { transform: translateX(0) translateY(0) rotate(0deg); } 25% { transform: translateX(5vw) translateY(30vh) rotate(5deg); } 50% { transform: translateX(-10vw) translateY(40vh) rotate(-10deg); } 75% { transform: translateX(5vw) translateY(30vh) rotate(5deg); } 100% { transform: translateX(0) translateY(0) rotate(0deg); } }",
  "animation": "descend 10s ease-in-out infinite",
  "fallback": "float"
}

The web app takes these values and applies them to the interface. The deep blue background, the downward movement pattern, and the nautical-themed caption all work together to create the feeling of a submarine descent. The UI acts out the scene.
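To make that step concrete, here is a rough sketch of what applying a scene object could look like in vanilla JavaScript. It is an illustration under assumptions, not the project’s actual code: the `applyScene` function and the shape of the `ui` argument (a page element, the square, a caption element, and a `<style>` tag that holds the keyframes) are hypothetical.

```javascript
// Hypothetical sketch: copy each value from the scene JSON into the interface.
// `ui` is an assumed bundle of references, for example:
//   { page: document.body,
//     square: document.querySelector("#square"),
//     caption: document.querySelector("#caption"),
//     keyframeStyle: document.querySelector("#scene-keyframes") }
function applyScene(scene, ui) {
  // Scenery: background, text color, and typeface for the whole page.
  ui.page.style.backgroundColor = scene.background;
  ui.page.style.color = scene.content;
  ui.page.style.fontFamily = scene["font-family"];

  // Narration: the caption text.
  ui.caption.textContent = scene.caption;

  // The @keyframes rule must exist in a stylesheet before the
  // animation property can refer to it by name.
  ui.keyframeStyle.textContent = scene.keyframes;

  // The character: color, glow, and motion for the square.
  ui.square.style.backgroundColor = scene.content;
  ui.square.style.boxShadow = scene.shadow;
  ui.square.style.animation = scene.animation;
}
```

Because the function only sets properties on the objects it is handed, it is easy to exercise with stand-in objects outside the browser.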

The prompt gives specific technical and format guidance for the responses of each of those UI values, but it also gives artistic and creative guidance. For example, for movement the prompt suggests animation guidelines like these:

- Action: Large, dynamic movements (±30–45vw horizontally, ±25–40vh vertically). Create diagonal paths across all quadrants.

- Peaceful: Gentle movements (±15–25vw, ±10–20vh). Use figure-8 or circular patterns.

- Suspense: Small, rapid movements (±5–10vw, ±5–10vh) with unpredictable direction changes.

- Sleep/Dreamy: Combine gentle scaling (0.8–1.2) with slow drifting (±15vw, ±15vh).
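Guidelines like these are suggestions to the model, not guarantees. As an illustrative sketch only (the table and function names are hypothetical, and nothing in the project is known to work this way), a designer testing prompt variations could check whether a returned keyframes string stays within the upper bounds listed above. It reads only `translateX(…vw)` and `translateY(…vh)` values and ignores scaling and rotation:

```javascript
// Upper bounds taken from the movement guidelines above (hypothetical table).
const MOVEMENT_LIMITS = {
  action: { maxVw: 45, maxVh: 40 },
  peaceful: { maxVw: 25, maxVh: 20 },
  suspense: { maxVw: 10, maxVh: 10 },
  dreamy: { maxVw: 15, maxVh: 15 },
};

// Find the largest absolute offset used by one transform function,
// e.g. fn = "translateX" and unit = "vw". Unitless zeros are skipped.
function largestOffset(keyframes, fn, unit) {
  const pattern = new RegExp(`${fn}\\(\\s*(-?\\d+(?:\\.\\d+)?)${unit}\\s*\\)`, "g");
  let largest = 0;
  for (const match of keyframes.matchAll(pattern)) {
    largest = Math.max(largest, Math.abs(parseFloat(match[1])));
  }
  return largest;
}

// True when every horizontal and vertical offset fits the mood's range.
function staysWithinLimits(keyframes, mood) {
  const limits = MOVEMENT_LIMITS[mood];
  if (!limits) throw new Error(`Unknown mood: ${mood}`);
  return (
    largestOffset(keyframes, "translateX", "vw") <= limits.maxVw &&
    largestOffset(keyframes, "translateY", "vh") <= limits.maxVh
  );
}
```

Run against the submarine example, whose offsets peak at 10vw and 40vh, this check would accept the animation as “action” but reject it as “suspense.”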

The prompt likewise guides font selection with context-specific recommendations for web-safe font families:

- Kid-Friendly: “ui-rounded, Comic Sans MS, cursive”

- Sci-Fi: “Courier New, monospace”

- Horror: “fantasy, Times New Roman, serif”

- Zen: “ui-rounded, sans-serif”

The result is a system that can interpret and represent any scenario through simple visual and motion language.

Prompt as Creative Guide and Requirements Doc

The prompt serves triple duty as technical, creative, and product director. It used to be that programming app behavior was the exclusive domain of developers. Now designers and product folks can get in the mix, too, just by describing how they want the app to work. The prompt becomes a hybrid artifact to run the show: part design spec, part requirements doc, part programming language.

For Sentient Scenes, crafting these prompts became a central design activity—even more important than tinkering in Figma, which we hardly touched. Instead, we spent significant time in ChatGPT and Claude iterating on prompt variations to find the sweet spot of creativity and reliability.

We started with informal prompts to see what kinds of responses we’d get. We asked questions like:

If I give you a theme like ‘horror movie’, how would you animate my character? What colors would you use? What would the caption say?

And then we started telling it to format those responses in a structured way, starting with Markdown for readability, so responses to our prompts came back like this:

- background: #0D0D0D (Jet Black)

- foreground: #D72638 (Amaranth Red)

- caption: “The shadows twisted and crawled, whispering secrets no living soul should hear...”

- emoji: 🩸

Creepy, right? These explorations became the foundation for our system prompt, which evolved into a comprehensive creative and technical brief that we deliver to the system. It meant that we could test the implementation details before writing a single line of code.


As we became more satisfied with the results, we added new formatting instructions to the prompt to help it talk more seamlessly with the UI engine, the front-end code that draws the interface. We told the system to respond only in JSON objects with the CSS values and content prompts to insert into specific slots in the interface. It’s fun and challenging work to get a probabilistic system to talk to a deterministic one: a “creative” system organizing itself to speak in API.
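On the deterministic side of that conversation, it helps to assume the reply will occasionally arrive malformed. The sketch below shows one defensive way to handle that, assuming the reply comes back as a raw string; `parseSceneReply` and the `defaultScene` argument are hypothetical names, and the key list mirrors the JSON format in the prompt.

```javascript
// Keys the prompt asks for, plus the allowed names for the fallback animation.
const REQUIRED_KEYS = [
  "background", "content", "shadow", "caption",
  "font-family", "keyframes", "animation", "fallback",
];
const FALLBACK_NAMES = ["bounce", "float", "jitter", "pulse", "drift"];

// Hypothetical helper: return the parsed scene if it has the expected shape,
// otherwise return a known-good default so the UI never breaks.
function parseSceneReply(replyText, defaultScene) {
  let scene;
  try {
    scene = JSON.parse(replyText);
  } catch (error) {
    // The model added extra text or returned broken JSON.
    return defaultScene;
  }
  if (scene === null || typeof scene !== "object" || Array.isArray(scene)) {
    return defaultScene;
  }
  const complete = REQUIRED_KEYS.every((key) => typeof scene[key] === "string");
  const knownFallback = FALLBACK_NAMES.includes(scene.fallback);
  return complete && knownFallback ? scene : defaultScene;
}
```

A check like this only confirms the shape of the reply. Whether the CSS inside each string is valid is a separate question, which is presumably where the named "fallback" animation comes in.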

This is where product design is moving. Savvy designers now sketch prompts in addition to visual elements, exploring the boundaries of open-ended, radically adaptive experiences. The work becomes more meta: establishing the rules and physics of a tiny universe rather than specifying every interaction. The designer defines parameters and possibilities, then steps back to let the system and user collaborate within that carefully crafted sandbox.

Try It Yourself

We built Sentient Scenes as a demo and as a teaching tool. Play with it as a user, and then explore how it works as a product designer. Install it on your own server; it’s built with plain-old web technologies (no frameworks, just vanilla JavaScript and a simple PHP backend with minimal requirements).

This doesn’t have to be complicated. On the contrary, our goal with this little project was to show how simple it can be. This stuff is much more a challenge of imagination than of technology. Go splash in puddles; explore, and have a little fun. Sentient Scenes is a small demonstration of the big possibilities of Sentient Design.

About Sentient Design

Sentient Design is the already-here future of intelligent interfaces: AI-mediated experiences that feel almost self-aware in their response to user needs. These experiences are conceived and compiled in real time based on your intent in the moment—experiences that adapt to people, instead of forcing the reverse.

Sentient Design describes not only the form of these new user experiences but also a framework and a philosophy for working with machine intelligence as a design material.

The Sentient Design framework was created by Big Medium’s Josh Clark and Veronika Kindred as the core of the agency’s AI practice. Josh and Veronika are also authors of Sentient Design, the forthcoming book from Rosenfeld Media.

Ready to bring these concepts to your products? We help companies develop meaningful AI-powered experiences through strategy, design, and development services. Our Sentient Design workshops can also level up your team’s capabilities in this emerging field. Get in touch to explore how Sentient Design can transform your user experiences.