Systems Smart Enough To Know When They're Not Smart Enough

By

Josh Clark

Published Mar 13, 2017

Our answer machines have an over-confidence problem. Google, Alexa, and Siri often act as though they’re providing a definitive answer to questions when they’re on shaky ground, or even outright wrong.

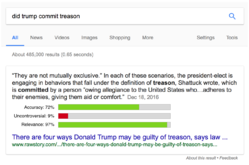

“Google’s Featured Snippets Are Worse Than Fake News,” writes Adrianne Jeffries, pointing out the downsides of Google’s efforts to provide what Danny Sullivan calls the “one true answer” as fast as possible. About 15% of Google searches offer a featured snippet, that text excerpt that shows up inside a big bold box at the top of the results. It’s presented as the answer to your question. “Unfortunately, not all of these answers are actually true,” Jeffries writes. You know, like this one:

The problem is compounded in voice interfaces like Echo or Google Home, where just a single answer is offered, giving the impression that it’s the only answer.

There are two problems here: bad info and bad presentation. I’ve got some thoughts on how designers of data-driven interfaces can get better at the presentation part to help caution users about inevitable bad info.

First, let’s lay out why all of this is troubling in the first place.

Crummy answers

Both Jeffries and Sullivan offer a slew of stinkers given by Google as the one true answer: presidents are Klan members, dinosaurs lived a few thousand years ago, Obama is king of America. And then there’s this truly horrifying result, since removed by Google, for the question, “are women evil?”

The worst of these cases tend to arise in contentious areas where the algorithm has been gamed. In other cases, it’s just straight-up error; Google finds a page with good info but extracts the wrong details from it, like its bad answer to the innocuous question, “how long does it take to caramelize onions?” As Tom Scocca writes:

Not only does Google, the world’s preeminent index of information, tell its users that caramelizing onions takes “about 5 minutes”—it pulls that information from an article whose entire point was to tell people exactly the opposite. A block of text from the Times that I had published as a quote, to illustrate how it was a lie, had been extracted by the algorithm as the authoritative truth on the subject.

Something similar might be behind the exactly-backwards response Alexa gave John Gruber when he asked, “how do you make a martini?”

“The martini is a cocktail made with 1 part gin and 6 parts vermouth.”

Those of you who enjoy a martini know that that recipe is backwards, and would make for a truly wretched drink — the International Bartenders Association standard recipe for a dry martini calls for 6 parts gin to 1 part vermouth. … “I don’t know, go check Wikipedia” is a much better response than a wrong answer.

Bad answers and unwarranted confidence

Let it first be said that in the billions of requests these services receive per day, these examples are rare exceptions. Google, Siri, Alexa, and the rest are freakin’ magic. The fact that they can take an arbitrary request and pluck any useful information at all from the vast depths of the internet is amazing. The fact that they can do it with such consistent accuracy is miraculous. I can forgive our answer machines if they sometimes get it wrong.

It’s less easy to forgive the confidence with which the bad answer is presented, giving the impression that the answer is definitive. That’s a design problem. And it’s one that more and more services will contend with as we build more interfaces backed by machine learning and artificial intelligence.

It’s also a problem that has consequences well beyond bad cocktails and undercooked onions, especially in the contested realms of political propaganda where a full-blown information war is underway. There, search results are a profoundly effective battleground: a 2014 study in India found that the order and content of search rankings could shift voting preferences of undecided voters by 20%.

The confident delivery of bad information can also stoke hate and even cost lives. In The Guardian, Carole Cadwalladr describes going down a dark tunnel when she stumbled on Google’s search suggestion, “are Jews evil?”

It’s not a question I’ve ever thought of asking. I hadn’t gone looking for it. But there it was. I press enter. A page of results appears. This was Google’s question. And this was Google’s answer: Jews are evil. Because there, on my screen, was the proof: an entire page of results, nine out of 10 of which “confirm” this.

The convicted mass-murderer Dylann Roof ran into a similar set of gamed results when he googled “black on white crime” and found page after page of white-supremacist propaganda at the top of the results. He later pinpointed that moment as the tipping point that radicalized him, eventually leading him to kill nine people in the Charleston church massacre. “I have never been the same since that day,” he wrote of that search result.

Responsible design for slippery data

Many of us treat the answer machines’ responses to our questions uncritically, as fact. The presentation of the answer is partly to blame, suggesting a confidence and authority that may be unwarranted. The ego of the machines peeks out from their “one true answer” responses.

How can we add some productive humility to these interfaces? How can we make systems that are smart enough to know when they’re not smart enough?

I’m not sure that I have answers just yet, but I believe I have some useful questions. In my work, I’ve been asking myself these questions as I craft and evaluate interfaces for bots and recommendation systems.

- When should we sacrifice speed for accuracy?

- How might we convey uncertainty or ambiguity?

- How might we identify hostile information zones?

- How might we provide the answer’s context?

- How might we adapt to speech and other low-resolution interfaces?

When should we sacrifice speed for accuracy?

The answer machines are in an arms race to provide the fastest, most convenient answer. It used to be that Google delivered a list of pages that were most likely to contain the information you were looking for. Want the weather for New York City? Here are links to pages that will tell you. Then Google started answering the question itself: no need to click through to another page, we’ll show the forecast above the old-school search results. These days, Google doesn’t even bother waiting for you to search. For certain searches, Chrome shows you the answer right in the search bar:

I often say that the job of designers is to collapse the time between intent and action. How can we deliver the desired action as close as possible to when the user knows what they want? Here, you’ve got the action (the answer) before you’ve even formulated the intent (the question). The machine anticipates you and effectively hits the “I’m feeling lucky” button after just a few keystrokes.

Speed is a competitive advantage, and time is considered the enemy in most interfaces. That’s reflected in our industry’s fascination with download and rendering speeds, though those metrics are merely offshoots of the underlying user imperative: help me get this job done quickly. “Performance isn’t the speed of the page,” says Gerry McGovern. “It’s the speed of the answer.”

But it has to be the right answer. While this approach works a treat for simple facts like weather, dates, or addresses, it starts to get hairy in more ambitious topics—particularly when those topics are contentious.

The reasonable desire for speed has to be tempered by higher-order concerns of fact and accuracy. Every data-driven service has a threshold where confidence in the data gives way to a damaging risk of being wrong. That’s the threshold where the service can no longer offer “one true answer.” Designers have to be vigilant and honest about where that tipping point lies.

I believe Google and the rest of the answer machines still need to tinker with their tipping-point settings. They crank the speed dial at the expense of accuracy, and so we see the confident expression of wrong or controversial answers all too often.

Still, you can see that there is a tipping point programmed into the service. For Google, that tipping point is visually represented by the presence or absence of the featured snippet, the wrapped-with-a-bow answer at the top of the page. When there’s no high-confidence answer—about 85% of the time—Google falls back to a simple, old-school list of search results. It’s John Gruber’s premise that “I don’t know” is better than a wrong answer.

Do things have to be so either-or, though? Instead of choosing between “I know the answer” and “I don’t know the answer,” perhaps the design of these services should get better at saying, “I think I know.” That brings us to the next question to ask when designing these services.
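To make that middle ground concrete, here is a minimal sketch of a response policy with two thresholds instead of one, so that a middle band of confidence produces a hedged answer rather than either a featured answer or a plain list of links. Everything in it is hypothetical: the `Candidate` type, the threshold values, and the response wording are assumptions for illustration, not how Google or Alexa actually decide.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds; a real service would tune them against measured error rates.
ANSWER_THRESHOLD = 0.9  # at or above: present one confident answer ("I know")
HEDGE_THRESHOLD = 0.6   # between the two: present the answer, hedged ("I think I know")


@dataclass
class Candidate:
    text: str
    confidence: float  # assumed: the system's estimate (0..1) that the answer is correct


def present(candidate: Optional[Candidate]) -> str:
    """Pick among "I know," "I think I know," and "I don't know"."""
    if candidate is None or candidate.confidence < HEDGE_THRESHOLD:
        # Fall back to the old-school list of results instead of a featured answer.
        return "I'm not sure. Here are some pages that might help."
    if candidate.confidence < ANSWER_THRESHOLD:
        return f"I think the answer is: {candidate.text} (but I'm not certain)"
    return candidate.text


print(present(Candidate("About 5 minutes", 0.7)))
# I think the answer is: About 5 minutes (but I'm not certain)
```

The interesting design choice is the middle band: it gives the interface a way to say "I think I know" instead of forcing every response into all-or-nothing.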

How might we convey uncertainty or ambiguity?

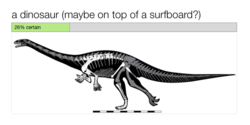

One of my favorite Twitter accounts these days is @picdescbot, a bot that describes random photos by running them through Microsoft’s computer-vision service. It generally gets in the right ballpark, but with misreads that are charmingly naive:

Other times it’s just not even close:

In all of these cases, the description is presented with the same matter-of-fact confidence. The bot is either right or it’s wrong. @picdescbot is a toy, to be sure, and a big part of its charm is in its overly confident statements. But what if we wanted to make it more nuanced—to help the visually disabled understand what’s pictured, for example?

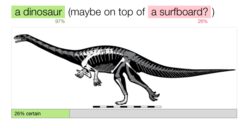

Behind the scenes, the algorithm reports a more subtle understanding. Here, the system reports 97% confidence that it’s looking at a dinosaur, but only 26% confidence that it’s on a surfboard.

Acknowledging ambiguity and uncertainty has to be an important part of designing the display of trusted systems. Prose qualifiers can help here. Instead of “a dinosaur on top of a surfboard,” a more accurate reflection of the algorithm’s confidence would be “a dinosaur (maybe on top of a surfboard?)”
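A minimal sketch of that kind of prose qualifier, assuming the vision service returns a confidence score (0 to 1) for each phrase of its description. The 97% and 26% figures come from the example above; the `hedged_caption` function and its 50% cutoff are hypothetical.

```python
from typing import List, Tuple

# Hypothetical cutoff: phrases below this confidence get hedged wording.
HEDGE_BELOW = 0.5


def hedged_caption(subject: Tuple[str, float],
                   details: List[Tuple[str, float]]) -> str:
    """Build a caption whose wording reflects per-phrase confidence.

    `subject` and each item in `details` are (phrase, confidence) pairs,
    with confidence between 0 and 1.
    """
    phrase, confidence = subject
    caption = phrase if confidence >= HEDGE_BELOW else f"possibly {phrase}"
    for detail, detail_confidence in details:
        if detail_confidence >= HEDGE_BELOW:
            caption += f" {detail}"
        else:
            caption += f" (maybe {detail}?)"
    return caption


print(hedged_caption(("a dinosaur", 0.97), [("on top of a surfboard", 0.26)]))
# a dinosaur (maybe on top of a surfboard?)
```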

Other signals could come into play, too. We could add a caption of the overall confidence of the result, supplemented by a visual indicator:

…and even do the same for individual words or comments in the description:

A similar set of quick hints might prove useful in Google’s featured snippets. For more complex topics, however, we’d likely want to know more than just how “true” the result is. We might want to see confidence on a few dimensions: the degree to which the facts of the snippet are definitive, relevant, and controversial. (There’s also reliability of the source; I’ll get to that in a bit.) I’m just noodling here, and I’m not certain those terms are right, but conceptually I’m thinking of something like:

Relevance and definitive accuracy are metrics that we’re already familiar with. The notion of controversy is more ticklish. It applies neatly to places where facts are emerging but remain in dispute. But it’s much more challenging for topics that are under siege by cynical attempts to game search results.
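One hypothetical way to carry those dimensions is as separate scores on each snippet, bucketed into plain-language labels, with a rule that withholds the featured box when any dimension looks shaky. The dimension names come from the discussion above; the scores, cutoffs, and labels are assumptions, and how to actually measure something like "controversial" remains the open question.

```python
from dataclasses import dataclass


@dataclass
class SnippetConfidence:
    """Hypothetical per-snippet scores, each between 0 and 1."""
    definitive: float     # how settled the facts in the snippet are
    relevant: float       # how well the snippet answers the question asked
    controversial: float  # how disputed the topic is


def label(score: float) -> str:
    # Coarse buckets are easier to read at a glance than raw percentages.
    if score >= 0.75:
        return "high"
    if score >= 0.4:
        return "medium"
    return "low"


def summarize(c: SnippetConfidence) -> str:
    return (f"Definitive: {label(c.definitive)} | "
            f"Relevant: {label(c.relevant)} | "
            f"Controversial: {label(c.controversial)}")


def should_feature(c: SnippetConfidence) -> bool:
    # Only box up "one true answer" when it is settled, on point, and not disputed.
    return c.definitive >= 0.75 and c.relevant >= 0.75 and c.controversial < 0.4


settled = SnippetConfidence(definitive=0.9, relevant=0.8, controversial=0.1)
print(summarize(settled))       # Definitive: high | Relevant: high | Controversial: low
print(should_feature(settled))  # True
```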

As I’ve wrestled with this, I’ve found the term “controversy” isn’t strong enough for those cases. Saying that women or Jews are evil isn’t “controversial”; it’s hostile hate speech. Saying that Barack Obama isn’t an American citizen is a cynical lie. There are some cases where the data has been poisoned, where the entire topic has become a hostile zone too challenging for the algorithms to make reliable judgments.

How might we identify hostile information zones?

We live in an era of hot-button topics where truth is fiercely disputed, and where two well-intentioned people can believe a completely opposite set of “facts.” Worse, we also have ill-intentioned saboteurs spreading misinformation to create hatred or controversy where none should exist. With its awful track record on questions like “are women evil?” and “are Jews evil?”, Google has proven that algorithms aren’t up to the task of sorting out reliable facts when bad actors are fouling the information supply.

When you have malicious information viruses circulating the system, our answer machines should do more to signal that their immune system has been compromised. They should at least acknowledge that some answers are disputed and that the system might not have the judgment to determine the truth.

The moment an algorithm fails is an excellent time to supplement it with human judgment. Wikipedia’s crowd-sourced editor model generally does well at monitoring and flagging contentious topics and articles. The system is also very public about its potential problems. You can review all 6000+ of Wikipedia’s controversial/disputed pages; these are the articles that may not conform to the encyclopedia’s neutral-POV policy.

Perhaps our systems have the data to detect the signals of controversy, or maybe we need to rely on human know-how for that. Either way, whether flagged by people or robots, there are clearly some topics that demand some arm waving to alert the reader to proceed with a skeptical eye and critical mind. That’s especially true when data is faulty, controversial, incomplete, or subject to propaganda campaigns. All of these cases create hostile information zones.

In the instances when our tools can’t make sense of besieged topics, then our tools have to let us know that we can’t rely on them. The answer machines should at least provide context—even if just a flag to say proceed with caution—along with the raw source material for humans to apply some smarter interpretation. How might it change things if search results in hostile information zones said something like this:

Warning: This topic is heavily populated by propaganda sites, which may be included in these results. Read with a critical eye, and check your facts with reliable references, including [set of trusted resources].
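A rough sketch of how such a flag might be triggered, assuming two hypothetical inputs: a set of domains that people or automated systems have flagged as propaganda sources, and an editorial flag for topics humans have marked as disputed (in the spirit of Wikipedia's disputed-page lists). The 30% trigger, the domain names, and the function names are illustrative assumptions.

```python
from typing import Iterable, List, Set
from urllib.parse import urlparse

# Hypothetical trigger: share of results coming from flagged domains.
HOSTILE_SHARE = 0.3

WARNING = ("Warning: This topic is heavily populated by propaganda sites, "
           "which may be included in these results. Read with a critical eye, "
           "and check your facts with reliable references.")


def flagged_share(result_urls: Iterable[str], flagged_domains: Set[str]) -> float:
    """Fraction of result URLs whose hostname is in the flagged set."""
    urls = list(result_urls)
    if not urls:
        return 0.0
    hits = sum(1 for url in urls if urlparse(url).hostname in flagged_domains)
    return hits / len(urls)


def render_results(result_urls: List[str], flagged_domains: Set[str],
                   flagged_by_editors: bool = False) -> List[str]:
    """Return the results, prefixed with a warning when the topic looks hostile."""
    page = list(result_urls)
    if flagged_by_editors or flagged_share(page, flagged_domains) >= HOSTILE_SHARE:
        page.insert(0, WARNING)
    return page


results = ["https://propaganda.example/a",
           "https://propaganda.example/b",
           "https://encyclopedia.example/c"]
print(render_results(results, {"propaganda.example"})[0] == WARNING)  # True
```

Either signal alone, machine-detected or human-flagged, is enough to raise the warning, since neither is reliable by itself.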

In topics like these, we need more explicit help in evaluating the source and logic behind the answer.

How might we provide the answer’s context?

Google gives only the shallowest context for its featured snippets. Sure, the snippets are always sourced; they plainly link to the page where the answer was extracted. But there’s no sense of what that source is. Is it a Wikipedia-style reference site? A mainstream news site? A partisan propaganda site? Or perhaps a blend of each? There’s no indication unless you visit the page and establish the identity and trustworthiness of the source yourself.

But nobody follows those links. “You never want to be in that boxed result on Google,” About.com CEO Neil Vogel told me when we designed several vertical sites for the company. “Nobody clicks through on those top results. Their search stops when they see that answer.” About.com sees a steep traffic drop on search terms when their content shows up as the featured answer.

People don’t click the source links, and that’s by design. Google is explicitly trying to save you the trouble of visiting the source site. The whole idea of the featured snippet is to carve out the presumed answer from its context. Why be a middleman when you can deliver the answer directly?

The cost is that people miss the framing content that surrounds the snippet. Without that, they can’t even get a gut sense of the personality and credibility of the source. It’s just one click away, but as always: out of sight, out of mind. And the confidence of the presentation doesn’t prompt much fact-checking.

Quick descriptions of the source could be helpful. Human reporters are adept at briefly identifying their sources, suggesting both agenda and expertise: “a liberal think tank in California,” “a lobbying organization for the pharmaceuticals industry,” “a scientist studying the disease for 20 years,” “a concerned parent.” What’s the corollary for data sources? How might we “caption” sources with brief descriptions or with data that suggest the source’s particular flavor of knowledge?

Or what if we try to rate sources? One way machines gauge and reflect trust is by aggregating lots of disparate signals. As users, we tend to trust the restaurant that has four stars on 300 Yelp reviews more than we trust the restaurant that has five stars on just one. Google itself is built on a sophisticated version of just that kind of review: its original breakthrough innovation was PageRank, measuring authority by the number of inbound links a page received.
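The Yelp intuition has a standard formalization, the Bayesian average: score = (C × m + n × r) / (C + n), where r is an item's mean rating over n ratings, m is a prior mean, and C is how much weight the prior gets. It pulls thinly reviewed items toward the prior until they have enough ratings to speak for themselves. This is a swapped-in textbook technique, not a description of how Yelp or Google rank anything; the prior mean of 3 and prior weight of 10 are arbitrary assumptions.

```python
def bayesian_average(mean_rating: float, num_ratings: int,
                     prior_mean: float = 3.0, prior_weight: float = 10.0) -> float:
    """Shrink an item's mean rating toward prior_mean.

    prior_weight behaves like that many phantom ratings at prior_mean, so an item
    needs many real ratings before its own average dominates.
    """
    total = mean_rating * num_ratings
    return (prior_weight * prior_mean + total) / (prior_weight + num_ratings)


print(round(bayesian_average(4.0, 300), 2))  # 3.97: four stars on 300 reviews
print(round(bayesian_average(5.0, 1), 2))    # 3.18: five stars on one review
```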

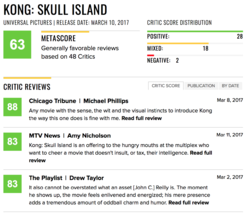

Reviews and PageRank can both be gamed, of course. One hedge for that is to identify a set of trusted reviewers. Metacritic aggregates movie ratings by professional film critics to give an establishment perspective. How might we apply something similar to track and rate the trustability of data sources?
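A minimal sketch of the trusted-reviewer hedge: weight each rating by how much we trust the reviewer, so a flood of unknown accounts can't easily swamp a few established ones. The reviewer IDs, trust weights, and the low default weight for unknown reviewers are all hypothetical.

```python
from typing import Dict, List, Tuple


def trust_weighted_score(reviews: List[Tuple[str, float]],
                         reviewer_trust: Dict[str, float],
                         default_trust: float = 0.05) -> float:
    """Weighted mean rating, where each reviewer's vote counts by their trust weight.

    reviewer_trust holds weights for a curated set of trusted reviewers;
    anyone not in it gets default_trust, so unknown accounts count for little.
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for reviewer, rating in reviews:
        weight = reviewer_trust.get(reviewer, default_trust)
        weighted_sum += weight * rating
        total_weight += weight
    if total_weight == 0:
        raise ValueError("no reviews with positive weight")
    return weighted_sum / total_weight


trusted = {"critic_a": 1.0, "critic_b": 1.0}
reviews = [("critic_a", 2.0), ("critic_b", 3.0)]
reviews += [(f"new_account_{i}", 5.0) for i in range(20)]  # a possible brigade

print(round(trust_weighted_score(reviews, trusted), 2))     # 3.33
print(round(sum(r for _, r in reviews) / len(reviews), 2))  # 4.77 (unweighted)
```

The hard part, of course, is not the arithmetic but deciding who belongs in the trusted set.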

And perhaps as important: how might we offer insight into why this is the answer or top result? “Neither Google or Facebook make their algorithms public,” Carole Cadwalladr wrote in her Guardian piece. “Why did my Google search return nine out of 10 search results that claim Jews are evil? We don’t know and we have no way of knowing.”

But it doesn’t have to be that way. Even very sophisticated data services can offer hints about why data pops up in our feed. We see it in recommendation services like Netflix (“Because you watched Stranger Things,” “TV dramas with a strong female lead”) or Amazon (“Buy it again,” “Inspired by your browsing history,” “People who bought that widget also bought these gizmos”). Even coarse insights are helpful for understanding the logic of why we see certain results.
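The underlying pattern is simple to sketch: keep the reason attached to each result instead of discarding it after ranking. The toy recommender below matches on shared tags; the `Item` type, the tags, and the titles other than Stranger Things are hypothetical, and the logic is far simpler than whatever Netflix or Amazon actually run.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Item:
    title: str
    tags: Set[str]


@dataclass
class Recommendation:
    item: Item
    reasons: List[str] = field(default_factory=list)  # human-readable "why"


def recommend(catalog: List[Item], watched: List[Item]) -> List[Recommendation]:
    """Suggest unwatched items that share a tag with something watched, keeping the reason."""
    seen = {w.title for w in watched}
    recs = []
    for item in catalog:
        if item.title in seen:
            continue
        reasons = [f"Because you watched {w.title}" for w in watched if item.tags & w.tags]
        if reasons:
            recs.append(Recommendation(item, reasons))
    # Items backed by more reasons rank higher.
    recs.sort(key=lambda r: len(r.reasons), reverse=True)
    return recs


stranger = Item("Stranger Things", {"sci-fi", "1980s"})
catalog = [stranger,
           Item("Time Loop Mystery", {"sci-fi", "mystery"}),
           Item("Cooking Contest", {"food"})]
for rec in recommend(catalog, [stranger]):
    print(rec.item.title, "|", "; ".join(rec.reasons))
# Time Loop Mystery | Because you watched Stranger Things
```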

How might we adapt to speech and other low-resolution interfaces?

The “one true answer” problem becomes especially acute when we’re speaking to Alexa or Google Home or other voice services. Voice is a super-low-resolution interface. You can’t pack anywhere near as much data into a conversation as you can on higher-resolution interfaces like paper or a webpage. You can communicate that data eventually; it just takes more time than it does in other modes.

With time at a premium, Alexa and Google Home call it quits at the top result. Google’s onscreen featured snippet is effectively Google Home’s entire answer.

“Google’s traditional list of search results does not translate well to voice,” writes Danny Sullivan. “Imagine Google Home reading you a list of 10 websites when you just want to know how many calories are in an orange.”

The calorie question, of course, is the unequivocal quick-info data that our answer machines are good at. But what about when there’s not a quick answer?

The word “set” has nearly 500 definitions in the Oxford English Dictionary. Ask Alexa for the definition, and the response takes over 60 seconds: “Set has many different meanings,” Alexa says, and then lists 15 definitions, five each for adjective, verb, and noun. The answer is at once too detailed (the dense response is hard to follow) and not detailed enough (only about 3 percent of the word’s definitions).

A really good thing, though, is that Alexa kicks off by saying that there’s more than one answer: “Set has many different meanings.” It’s an immediate disclaimer that the answer is complicated. But how to better communicate that complexity?

The way human beings use speech to negotiate one another’s vast and complex data stores is through conversation and dialogue. I ask you a question and you give me some information. I ask another question to take the conversation down a specific path, and you give me more detailed information about that. And so on. A dialogue shaped like this will certainly be one way forward as these voice interfaces mature:

Me: What does “set” mean?

Alexa: “Set” has 464 definitions. Would you like to hear about nouns, verbs, or adjectives?

Me: Nouns.

Alexa: As a noun, “set” means: [three definitions]. Would you like more meanings?

Me: Would you give me an example sentence with that first definition?

Alexa: Sure. “He brought a spare set of clothes.”

At the moment, however, our voice interfaces are more call-and-response than dialogue. Ask Alexa a question, and it gives an answer and then immediately forgets what you asked. There’s no follow-up; every question starts from scratch. Google Assistant is starting to get there, saving conversation “state” so you can ask follow-up questions. Other systems will follow as we transition from voice interface to truly conversational interface. Dialogue will certainly be a critical way to explore information beyond the first response.
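Keeping that state doesn't have to be elaborate. The toy sketch below remembers the current word, part of speech, and position in the list, so a part-of-speech reply or "more" makes sense as a follow-up, roughly mirroring the imagined exchange above. The class, its methods, and the six sample definitions are hypothetical placeholders, not Alexa's behavior or the OED's data.

```python
from typing import Dict, List, Optional

# Placeholder data for illustration only (word -> part of speech -> definitions).
SAMPLE = {
    "set": {
        "noun": [
            "a group of things that belong together",
            "the scenery for a play or film",
            "a group of games in tennis",
            "a radio or television receiver",
        ],
        "verb": ["to put something in a particular place"],
        "adjective": ["fixed or arranged in advance"],
    }
}


class DefinitionDialogue:
    """Toy dialogue that remembers what was asked, so follow-ups don't start from scratch."""

    def __init__(self, dictionary: Dict[str, Dict[str, List[str]]], page_size: int = 3):
        self.dictionary = dictionary
        self.page_size = page_size
        self.word: Optional[str] = None  # conversation state: current word
        self.pos: Optional[str] = None   # current part of speech
        self.offset = 0                  # how many definitions have been read so far

    def ask_word(self, word: str) -> str:
        self.word, self.pos, self.offset = word, None, 0
        senses = self.dictionary[word]
        count = sum(len(defs) for defs in senses.values())
        options = ", ".join(senses)
        return f'"{word}" has {count} definitions. Would you like to hear about {options}?'

    def choose_pos(self, pos: str) -> str:
        self.pos, self.offset = pos, 0
        return self.more()

    def more(self) -> str:
        if self.word is None:
            return "What word would you like to look up?"
        if self.pos is None:
            return "Which part of speech would you like to hear about?"
        defs = self.dictionary[self.word][self.pos]
        page = defs[self.offset:self.offset + self.page_size]
        if not page:
            return "That's all the meanings I have."
        self.offset += len(page)
        article = "an" if self.pos[0] in "aeiou" else "a"
        reply = f'As {article} {self.pos}, "{self.word}" means: ' + "; ".join(page) + "."
        if self.offset < len(defs):
            reply += " Would you like more meanings?"
        return reply


dialogue = DefinitionDialogue(SAMPLE)
print(dialogue.ask_word("set"))
print(dialogue.choose_pos("noun"))
print(dialogue.more())
```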

In the meantime, the Alexa-style caveat that there’s more than one meaning can at least serve us well for flagging contentious or ambiguous content. “This topic is controversial, and there are competing views of the truth…”

Even when truly conversational interfaces arrive, most of us probably won’t have time for a Socratic dialogue with our living-room answer machine about the nuances of the word “set.” A second approach, and one we can apply right now, is to kick complex answers over to a higher-resolution interface: “‘Set’ has 464 definitions. I texted you a link to the complete list.” Or, “There’s not an easy answer to that. I emailed you a list of links to explore the topic.”
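A minimal sketch of that handoff decision: estimate how long an answer would take to speak, and past a budget, speak a summary and route the full answer to a screen. The 150-words-per-minute speaking rate, the 20-second budget, and the function names are assumptions for illustration; the actual sending (text, email, app notification) is left out.

```python
from typing import Tuple

# Assumed speaking rate; real text-to-speech rates vary by voice and settings.
WORDS_PER_MINUTE = 150
# Hypothetical budget for how long a spoken answer should run.
MAX_SPOKEN_SECONDS = 20


def spoken_seconds(text: str) -> float:
    """Rough estimate of how long `text` takes to read aloud."""
    return len(text.split()) / WORDS_PER_MINUTE * 60


def voice_response(full_answer: str, summary: str) -> Tuple[str, bool]:
    """Return (what to say aloud, whether to send the full answer to a screen)."""
    if spoken_seconds(full_answer) <= MAX_SPOKEN_SECONDS:
        return full_answer, False
    return f"{summary} I texted you a link to the full answer.", True


long_answer = " ".join(["definition"] * 400)  # stand-in for a long list of meanings
say, handoff = voice_response(long_answer, '"Set" has 464 definitions.')
print(say)      # "Set" has 464 definitions. I texted you a link to the full answer.
print(handoff)  # True
```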

As we pour our data-driven systems into more and more kinds of interfaces, those interfaces have to be adept at encouraging us to switch to others that are likely more appropriate to the data we’re trying to communicate. A key to designing for low-resolution interfaces is helping to leap the gap to higher-resolution contexts.

Critical thinking is... critical

The questions I’m asking here are tuned to inquire how we might arm people with stronger signals about how much we can trust an answer machine’s response.

This approach suggests a certain faith in human beings’ capacity to kick in with critical thinking in the face of ambiguous information. I’d like to be optimistic about this, to believe that we can get people thinking about the facts they receive if we give them the proper prompts.

We’re not in a good place here, though. One study found that only 19% of college faculty can even give a clear explanation of what critical thinking is—let alone teach it. We lean hard on the answer machines and the news-entertainment industrial complex to get the facts that guide our personal and civic decisions, but too many of us are poorly equipped with skills to evaluate those facts.

So the more Google and other answer machines become the authorities of record, the more their imperfect understanding of the world becomes accepted as fact. Designers of all data-driven systems have a responsibility to ask hard questions about proper thresholds of data confidence—and how to communicate ambiguous or tainted information.

How can we make systems that are not only smart enough to know when they’re not smart enough… but smart enough to say so and signal that human judgment has to come into play?

If your company is wrestling with how to present complex data with confidence and trust, that’s exactly the kind of ambitious problem that we like to solve at Big Medium. Get in touch.