Fact Check Now Available in Google Search and News

By Josh Clark

Published Apr 8, 2017

Google announced that the search engine has begun giving special treatment to fact-checking websites in search results:

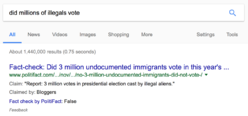

> For the first time, when you conduct a search on Google that returns an authoritative result containing fact checks for one or more public claims, you will see that information clearly on the search results page. The snippet will display information on the claim, who made the claim, and the fact check of that particular claim.

So, for example, searching for “did millions of illegals vote” surfaces a fact-check article from politifact.com. The result for that article is captioned with a brief summary of the fact check, which rates as untrue the claim that millions of non-citizens voted in the US presidential election.

At a minimum, this seems like it will be a useful step in flagging misinformation, or facts in dispute. The presence of one or more fact-check results in a search at least hints that there’s bad information or cynical propaganda afoot.

More broadly, this may prove to be a foundation for doing more to identify hostile information zones—toxic topics that poison our civic discourse and confuse search engines. How might the presence of these results be highlighted even more to caution the reader to be alert or skeptical when exploring this topic?

The success of this depends on Google identifying genuinely trustworthy sites to get this call-out treatment. Here is how it works behind the scenes: Google extracts structured data embedded in the page (specifically, markup using the schema.org ClaimReview schema). Any website can insert that fact-check markup, but Google says it’s giving the new treatment only to sites “algorithmically determined to be an authoritative source.”
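To make that markup concrete, here is a minimal sketch of what a ClaimReview block might look like when generated in Python. The property names (`claimReviewed`, `itemReviewed`, `reviewRating`, and so on) come from the schema.org ClaimReview type, but the helper functions, the sample claim, the names, and the example.org URL are all hypothetical. Which properties Google actually requires, and how it judges a site authoritative, is not covered here; `snippet_fields` only illustrates how the three items shown in the search snippet could map onto the markup.

```python
import json


def build_claim_review(claim, claimant, verdict, review_url, reviewer, date_published):
    """Return a schema.org ClaimReview object as a plain dict (hypothetical helper)."""
    return {
        "@context": "https://schema.org",
        "@type": "ClaimReview",
        "url": review_url,
        "datePublished": date_published,
        "claimReviewed": claim,  # the claim being checked
        "author": {"@type": "Organization", "name": reviewer},  # the fact checker
        "itemReviewed": {
            "@type": "Claim",
            "author": {"@type": "Person", "name": claimant},  # who made the claim
        },
        "reviewRating": {
            "@type": "Rating",
            "alternateName": verdict,  # human-readable verdict, e.g. "False"
        },
    }


def to_script_tag(review):
    """Serialize the review as a JSON-LD script tag for embedding in a page."""
    # Escape "<" so a claim containing "</script>" cannot end the tag early.
    body = json.dumps(review, indent=2).replace("<", "\\u003c")
    return '<script type="application/ld+json">\n' + body + "\n</script>"


def snippet_fields(review):
    """Pull out the three items the search snippet is said to display."""
    return {
        "claim": review["claimReviewed"],
        "claimed_by": review["itemReviewed"]["author"]["name"],
        "fact_check": review["reviewRating"]["alternateName"],
    }


# Hypothetical sample data, for illustration only.
example = build_claim_review(
    claim="The moon is made of cheese",
    claimant="Example Claimant",
    verdict="False",
    review_url="https://example.org/fact-checks/moon-cheese",
    reviewer="Example Fact Checker",
    date_published="2017-04-08",
)
print(to_script_tag(example))
```

Note that nothing in the markup itself proves the publisher is trustworthy. Anyone can emit a block like this, which is why the authority judgment has to happen on Google's side.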

Algorithms can be fooled and gamed, of course, which is part of our fake-news mess in the first place. The promising step of calling out fact-check information would be seriously undermined if search results started including white-supremacist sites “fact checking” the equality of races, for example.

As I wrote in “Systems Smart Enough To Know When They’re Not Smart Enough,” our answer machines need to work harder at signaling when their answers may be compromised, whether by widespread misinformation or by outright manipulation. This is a challenge of design and presentation as much as machine learning. Google’s new tweak is a small but useful first step in improving presentation.

Worth noting: this fact-check approach may help address controversies and misunderstandings. However, it does not do much for other hostile information zones, such as the awful results and “answers” that Google delivers if you ask it whether women or Jews are evil. That kind of hate is not about “disputed facts.” Our answer machines will have to find other ways to highlight the toxicity of those topics and the illegitimacy of their sources. In the meantime, this new change may at least help take down more conventional misinformation.

See also: Facebook’s efforts to flag disputed news with third-party fact checkers and to offer tips for identifying fake news.
